Add page objects to AI spiders

In this chapter of the Zyte AI spiders tutorial, you will extend AI spiders with custom code.

AI is not a perfect solution. Eventually, you will find that it does not extract some specific field from some specific website the way it should. If you are in a hurry, you do not need to wait for us to fix it: AI spiders are designed so that you are always in full control.

The first step to customize AI spiders is to download them. Our AI spiders are implemented as an open-source Scrapy project, so you just need to download that project, customize it, and run it wherever you want, e.g. locally or in Scrapy Cloud.

Get AI spiders

Before you can customize AI spiders with code, you need to download their source code and make sure you can run it locally:

Install Python, version 3.10 or higher.

Open Visual Studio Code, select Source Control › Clone Repository from the sidebar, and enter the following URL:

https://github.com/zytedata/zyte-spider-templates-project.git

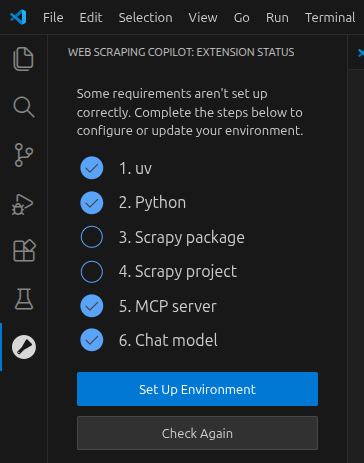

Select Web Scraping Copilot on the sidebar, and under Extension Status, complete the setup steps.

On the Python step, select Switch Interpreter › Create Virtual Environment › Venv and then select an installed Python executable.

Select View › Terminal and run:

pip install -r requirements.txt

Add a new line to zyte_spider_templates_project/settings.py to set your Zyte API key:

ZYTE_API_KEY = "YOUR_ZYTE_API_KEY"

If you have followed these steps correctly, you should be able to refresh the Spiders view in the Web Scraping Copilot sidebar and run the ecommerce spider template with the following Arguments:

-a url="https://books.toscrape.com/catalogue/category/books/mystery_3/index.html"

-a extract_from=httpResponseBody

-O items.jsonl

With these parameters, you are effectively running the same code as your last virtual spider.
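For reference, the same arguments can also be passed to the Scrapy command line outside the Web Scraping Copilot sidebar. The sketch below only builds and prints that command. It assumes the spider is named ecommerce, as the text above suggests, and that the command would be run from the project root; `build_crawl_command` is a hypothetical helper name.

```python
import shlex


def build_crawl_command(spider, spider_args, output_path):
    """Build a `scrapy crawl` command line as a list of arguments."""
    command = ["scrapy", "crawl", spider]
    for key, value in spider_args.items():
        # Each spider argument is passed as `-a name=value`.
        command += ["-a", f"{key}={value}"]
    # -O overwrites the output file instead of appending to it.
    command += ["-O", output_path]
    return command


command = build_crawl_command(
    "ecommerce",  # assumed spider name
    {
        "url": "https://books.toscrape.com/catalogue/category/books/mystery_3/index.html",
        "extract_from": "httpResponseBody",
    },
    "items.jsonl",
)
print(shlex.join(command))
```

To actually start the crawl from Python, the resulting list could be handed to `subprocess.run(command, check=True)`.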

The extracted data will be stored in an items.jsonl file in JSON Lines format, i.e. it should contain 32 lines, each with a JSON object ({…}).
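A quick way to confirm the shape of the output is to parse it. The following is a minimal sketch, assuming items.jsonl sits in the current working directory; `load_jsonl` is a hypothetical helper name, not part of the project.

```python
import json
from pathlib import Path


def load_jsonl(path):
    """Return one parsed JSON object per non-empty line of a JSON Lines file."""
    items = []
    with Path(path).open(encoding="utf-8") as lines:
        for line in lines:
            line = line.strip()
            if line:
                items.append(json.loads(line))
    return items


# Only runs when the spider has already produced its output file.
if Path("items.jsonl").exists():
    items = load_jsonl("items.jsonl")
    print(f"{len(items)} items extracted")
```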

Fix product parsing

The virtual spiders that you created for https://books.toscrape.com/ do not extract all aggregateRating nested fields. You will now fix that by writing custom code that parses that bit of data out of the corresponding HTML code.

AI spiders are implemented using web-poet, a web scraping framework with Scrapy support that allows implementing the parsing of a webpage as a Python class, called a page object class.

You can configure these page object classes to match a specific combination of URL pattern (e.g. a given domain) and output type (e.g. a product), and whenever your spider asks for data of that output type from a matching URL, your page object class will be used for the parsing.
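The matching idea can be illustrated with a toy registry. This is not how web-poet is implemented; it is a simplified sketch in plain Python, and `register`, `find_page_class` and `RULES` are hypothetical names used only for illustration.

```python
from urllib.parse import urlparse

# Hypothetical toy registry; web-poet keeps its own, richer rule registry.
RULES = []


def register(domain, item_type):
    """Class decorator that records a (domain, item type) -> class rule."""
    def decorator(page_class):
        RULES.append((domain, item_type, page_class))
        return page_class
    return decorator


def find_page_class(url, item_type, default=None):
    """Return the class registered for this URL's domain and item type."""
    host = urlparse(url).hostname or ""
    for domain, rule_item_type, page_class in RULES:
        domain_matches = host == domain or host.endswith("." + domain)
        if domain_matches and rule_item_type == item_type:
            return page_class
    return default


@register("books.toscrape.com", "product")
class BooksProductPage:
    """Stand-in for a page object class with custom parsing."""


# A matching URL and output type select the custom class...
print(find_page_class("https://books.toscrape.com/catalogue/x/index.html", "product"))
# ...while any other combination falls back to the default.
print(find_page_class("https://example.com/item/1", "product"))
```

The point of the design is that the spider only asks for an output type for a URL, and the rules decide which parsing code serves the request.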

To implement this custom parsing:

Add number-parser to requirements.txt, ideally with a pinned version, e.g.:

number-parser==0.3.2

Re-run the command to install requirements:

pip install -r requirements.txt

Create a file at zyte_spider_templates_project/pages/books_toscrape_com.py with the following code:

    import attrs
    from number_parser import parse_number
    from web_poet import AnyResponse, field, handle_urls
    from zyte_common_items import AggregateRating, AutoProductPage


    @handle_urls("books.toscrape.com")
    @attrs.define
    class BooksToScrapeComProductPage(AutoProductPage):
        response: AnyResponse

        @field
        def aggregateRating(self):
            rating_class = self.response.css(".star-rating::attr(class)").get()
            if not rating_class:
                return None
            rating_str = rating_class.split(" ")[-1]
            rating = parse_number(rating_str)
            if not rating:
                return None
            review_count_xpath = """
                //th[contains(text(), "reviews")]
                /following-sibling::td
                /text()
            """
            review_count_str = self.response.xpath(review_count_xpath).get()
            review_count = int(review_count_str)
            return AggregateRating(
                bestRating=5, ratingValue=rating, reviewCount=review_count
            )

This is what the code above does:

The @handle_urls decorator indicates that this parsing code should only be used on the books.toscrape.com domain.

Tip

To write more complex URL patterns, check out the @handle_urls reference.

Do not think too hard about the @attrs.define decorator. You will learn why it is needed later on.

BooksToScrapeComProductPage is an arbitrary class name that follows naming conventions for page object classes. What’s important is that it subclasses AutoProductPage, a base class for page object classes that parse products.

AutoProductPage uses Zyte API automatic extraction by default for all Product fields, so that you can override only specific fields with custom parsing code.

response: AnyResponse asks to get an instance of AnyResponse through dependency injection, that your code can then access at self.response.

Similarly to scrapy.http.TextResponse, AnyResponse gives you methods to easily run XPath or CSS selectors to get specific data.

AnyResponse also allows your code to work regardless of your extraction source, i.e. it will work for your 2 virtual spiders.

The @field-decorated aggregateRating method defines how to parse the Product.aggregateRating field.

If you inspect the HTML code of the star rating in book webpages, you will notice that it starts with something like:

<p class="star-rating Three">

So you get that class value, then take the last word, which is the rating in English (e.g. "Three"), and finally convert that into an actual number (e.g. 3) using number-parser, and return that as aggregateRating.ratingValue.

You get aggregateRating.reviewCount from the corresponding row of the book details table.

You return a hard-coded 5 as aggregateRating.bestRating, since the value is unlikely to change, and if it ever does, it would likely break the parsing of aggregateRating.ratingValue as well, so you would have to rewrite your parsing code anyway.
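The string handling behind the rating and the review count can be tried in isolation. The sketch below is dependency-free: a small dictionary stands in for number-parser, and both function names are hypothetical. It also guards the review count conversion, since the page object code above calls int() directly on the XPath result, which would raise a TypeError if the table row were ever missing.

```python
# Stand-in for number-parser: only the words the star rating can contain.
WORD_TO_NUMBER = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5}


def rating_from_class(class_value):
    """Turn a class value such as "star-rating Three" into 3, or None."""
    if not class_value:
        return None
    words = class_value.split()
    if not words:
        return None
    # The rating is the last word of the class attribute.
    return WORD_TO_NUMBER.get(words[-1].lower())


def parse_review_count(text):
    """Convert the review count cell text to an int, or None if unusable."""
    if text is None:
        return None
    text = text.strip()
    return int(text) if text.isdecimal() else None


print(rating_from_class("star-rating Three"))
print(parse_review_count(" 0 "))
```

Whether a missing review count should drop the whole aggregateRating value or only the reviewCount field is a design choice left to the page object.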

If you run the e-commerce spider template again, and you inspect the new content of items.jsonl, you will see that now the right rating data is being extracted from every book webpage.
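Instead of inspecting the file by eye, the check can be scripted. This is a minimal sketch, assuming that the nested fields are serialized under the names bestRating, ratingValue and reviewCount; `items_missing_rating` is a hypothetical helper name.

```python
import json
import os

# Assumed key names of the nested aggregateRating object in the output.
EXPECTED_KEYS = {"bestRating", "ratingValue", "reviewCount"}


def items_missing_rating(lines):
    """Return the 1-based line numbers of items lacking full rating data."""
    missing = []
    for number, line in enumerate(lines, start=1):
        if not line.strip():
            continue
        item = json.loads(line)
        rating = item.get("aggregateRating") or {}
        if not EXPECTED_KEYS.issubset(rating):
            missing.append(number)
    return missing


# Only runs when the spider has already produced its output file.
if os.path.exists("items.jsonl"):
    with open("items.jsonl", encoding="utf-8") as lines:
        print(items_missing_rating(lines))
```

An empty list means every item carries all three nested fields.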

Deploy to Scrapy Cloud

Now that you have successfully customized your AI spiders project to improve its output for products from https://books.toscrape.com, and you have run the e-commerce spider template locally to verify that it works as expected, you can deploy your project to Scrapy Cloud, so that your virtual spiders use it:

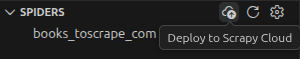

Select Web Scraping Copilot › Spiders on the sidebar and, on the view title, click the Deploy to Scrapy Cloud button.

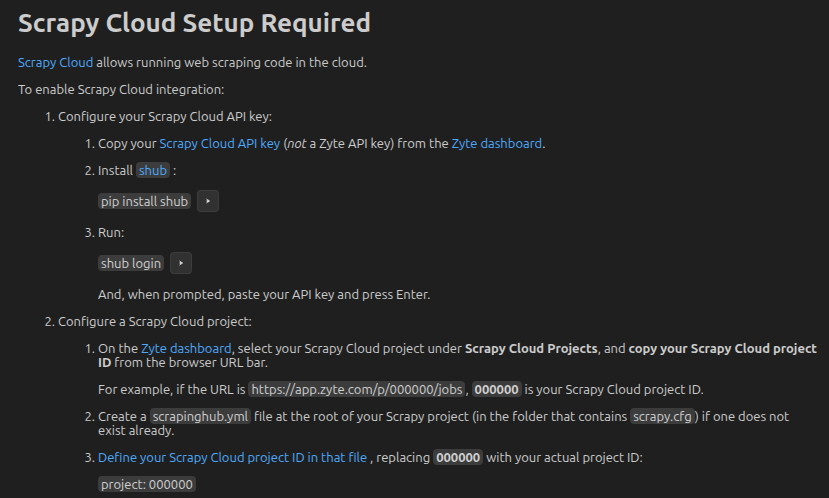

Complete the interactive Scrapy Cloud setup steps.

Add the following to zyte-spider-templates-project/scrapinghub.yml:

    stacks:
      default: scrapy:2.13-20250721

Click the Deploy to Scrapy Cloud button again, and confirm.

Once you see a Run your spiders at: <link> line in the output, your AI spiders project will have been deployed to your Scrapy Cloud project, replacing the default AI spiders tech stack.

Now you can run a job of any of your virtual spiders to verify that aggregateRating is also extracted as expected in Scrapy Cloud.

Whenever you make new changes to your AI spiders project locally, remember that you need to re-deploy your new changes to your Scrapy Cloud project.

Note

The default AI spiders tech stack, the one you get when you select Zyte’s AI-Powered Spiders during project creation, is automatically upgraded after new releases of the zyte-spider-templates library.

In contrast, after you deploy your own code to your Scrapy Cloud project, the tech stack of that project is no longer updated automatically. You are instead in full control, and decide when and how to upgrade it.

More customization options

Here are some of the many ways you can customize AI spiders through page objects:

Apply field processors.

Use data from AI parsing in your custom field implementations by reading it from AutoProductPage.product. For example, to make product names all caps, add the following to the page object class in zyte_spider_templates_project/pages/books_toscrape_com.py:

    @field
    def name(self):
        return self.product.name.upper()

Replace Product with a custom item class with custom fields.

Tip

It is recommended to stick to the standard item classes where possible, and use the additionalProperties field to extract custom fields if their values can be strings.
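The idea behind a field processor, a function applied to the value a field returns before it reaches the item, can be sketched without web-poet. The helper names below are hypothetical and this is not web-poet's implementation; see the web-poet documentation for how processors are actually attached to a field. The sketch also leaves non-string values untouched, so a product without a name would not cause an error when upper-casing.

```python
def strip_text(value):
    """Trim surrounding whitespace; leave non-string values untouched."""
    return value.strip() if isinstance(value, str) else value


def to_upper(value):
    """Upper-case strings; leave None and other values untouched."""
    return value.upper() if isinstance(value, str) else value


def apply_processors(value, processors):
    """Pass a raw field value through each processor, in order."""
    for processor in processors:
        value = processor(value)
    return value


print(apply_processors("  A Light in the Attic ", [strip_text, to_upper]))
print(apply_processors(None, [strip_text, to_upper]))
```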

Next steps

You have learned how you can use page object classes to override, extend or replace AI parsing with custom parsing code for specific combinations of item types and URL patterns.

In the next chapter, you will go further and create entirely new spider classes based on AI spiders.