Run your first AI spider

In this chapter of the Zyte AI spiders tutorial, you will run your first AI spider in Scrapy Cloud.

Note

AI spiders do not require Scrapy Cloud, as you will see later on. However, Scrapy Cloud lets you run AI spiders with minimal setup and no maintenance.

Create a project

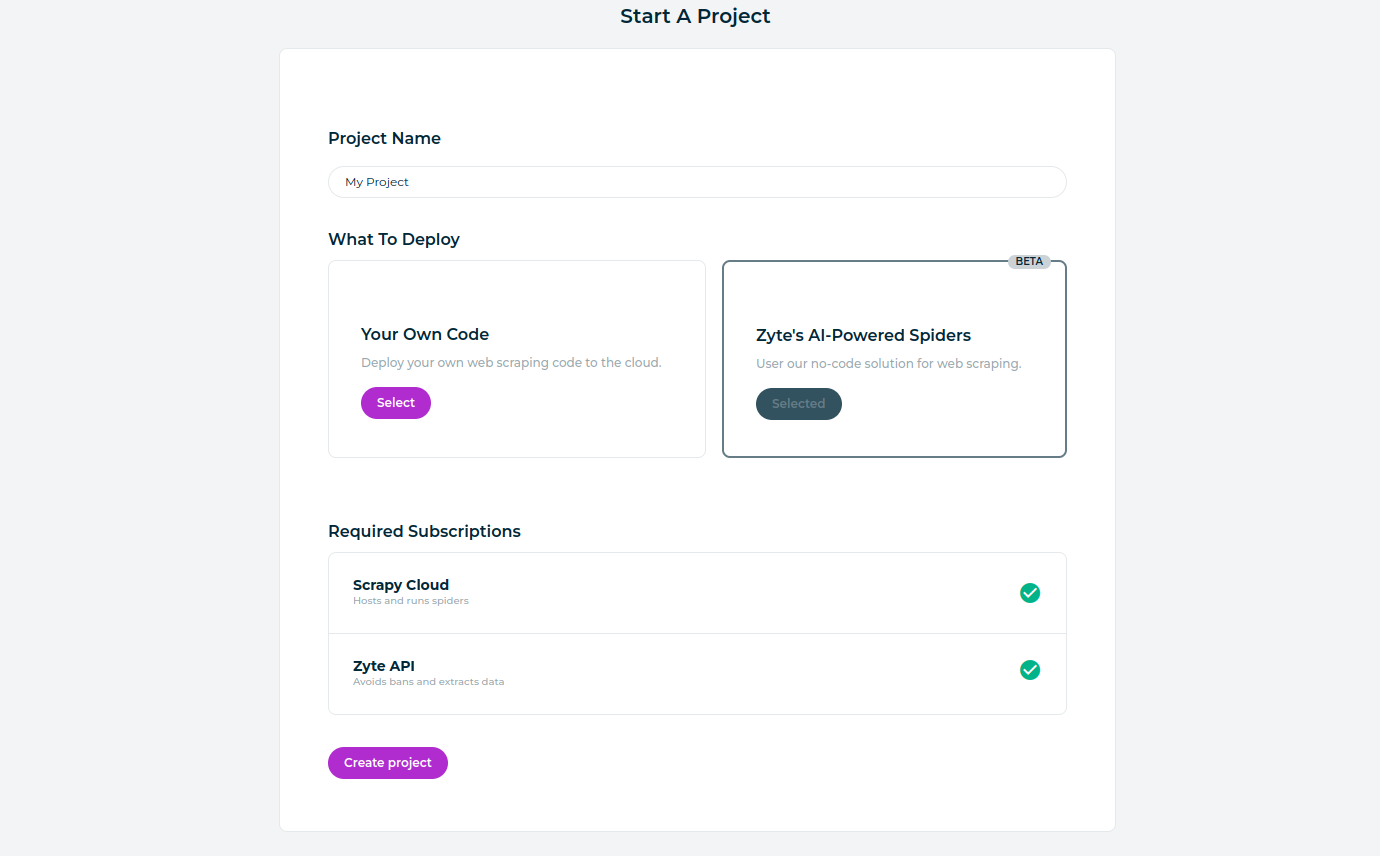

First create a Scrapy Cloud project with AI spiders:

Log in to the Zyte dashboard.

Open the Start a Project page.

Enter a Project Name.

Click Select under Zyte’s AI-Powered Spiders.

Click Create project.

Create and run an e-commerce virtual spider

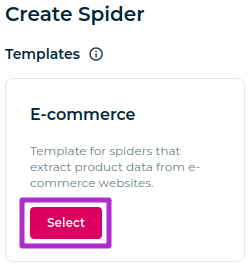

Once your project is created, you are taken to the Create Spider page of your new project. Now create an e-commerce virtual spider:

Click the Select button in the E-commerce box.

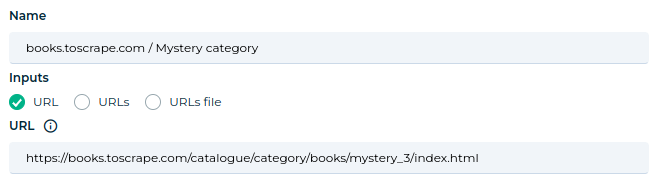

Fill in the fields that appear as follows:

In the Name field, type “books.toscrape.com / Mystery category”.

In the Inputs / URL field, enter the following URL:

https://books.toscrape.com/catalogue/category/books/mystery_3/index.html

Note

By default, AI spider templates are limited to 100 requests. That is enough for this spider. However, when creating other spiders, remember to set the Max Requests field to a different value if needed. Use

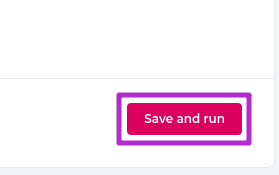

0to disable.On the bottom-right corner, click Save and run.

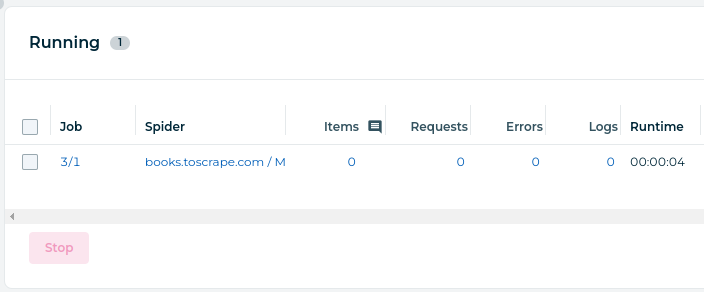

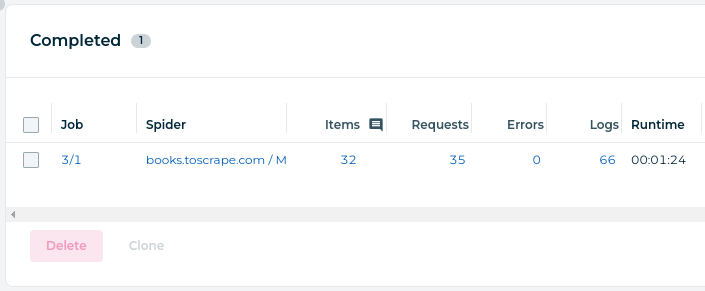

A Scrapy Cloud job now starts, executing your virtual spider. You can find the job under Running while it runs, and under Completed once it finishes.

The job visits the specified start URL, which is the first page of the Mystery book category on books.toscrape.com. It then automatically finds and visits the second page of that category and every book page linked from both category pages.

Once the job finishes, click the number in the Items column to open a page with the extracted data items, which you can also download. There should be 32 items, one for each book available in that category.

Understand how the spider works

What you have just run is a Scrapy spider that uses Zyte API automatic extraction so that it works on any e-commerce website.
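As a rough illustration of what a Zyte API automatic extraction request involves, the minimal sketch below builds (but does not send) a raw request asking for a single data type, such as productNavigation or product. It assumes the public https://api.zyte.com/v1/extract endpoint with HTTP Basic authentication, using your API key as the username and an empty password. The helper build_extract_request and the YOUR_API_KEY placeholder are hypothetical, and the AI spider does not necessarily send its requests this way.

```python
import base64
import json
import urllib.request

# Zyte API extraction endpoint (assumed public HTTP API endpoint).
ZYTE_API_URL = "https://api.zyte.com/v1/extract"


def build_extract_request(api_key: str, url: str, data_type: str) -> urllib.request.Request:
    """Hypothetical helper: build, but do not send, a Zyte API request.

    data_type is the automatic extraction type to ask for, for example
    "productNavigation" for category pages or "product" for product pages.
    """
    # The request body names the target URL and switches on one data type.
    body = json.dumps({"url": url, data_type: True}).encode("utf-8")
    # HTTP Basic auth: API key as the username, empty password.
    token = base64.b64encode(f"{api_key}:".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        ZYTE_API_URL,
        data=body,
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


start_url = "https://books.toscrape.com/catalogue/category/books/mystery_3/index.html"
nav_request = build_extract_request("YOUR_API_KEY", start_url, "productNavigation")
print(json.loads(nav_request.data))
```

To actually send the request you would pass it to urllib.request.urlopen with a real API key; the sketch stops short of that so it runs offline.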

By default, this spider:

Requests productNavigation data from the specified start URL.

Requests product data for every URL from productNavigation.items. Every item in the job output is one of these product records.

Repeats these steps for every URL from productNavigation.nextPage and productNavigation.subcategories.
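The three steps above, together with the Max Requests limit, can be sketched as a simple breadth-first loop. This is a minimal, offline sketch under stated assumptions, not the spider's actual implementation: the Navigation class is a hypothetical, simplified stand-in for a productNavigation result, fetch_navigation and fetch_product are injected placeholders for the Zyte API calls, and the visiting order and deduplication are assumptions. The mock data is also hypothetical, shaped like the Mystery category (20 books on the first page, 12 on the second).

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Navigation:
    """Hypothetical, simplified stand-in for a productNavigation result."""
    items: List[str] = field(default_factory=list)         # product page URLs
    next_page: Optional[str] = None                         # next listing page, if any
    subcategories: List[str] = field(default_factory=list)  # subcategory URLs


def crawl(
    start_url: str,
    fetch_navigation: Callable[[str], Navigation],
    fetch_product: Callable[[str], Dict],
    max_requests: int = 100,
) -> List[Dict]:
    """Breadth-first sketch of the default e-commerce crawl.

    max_requests caps the total number of fetches; 0 means no limit.
    """
    products: List[Dict] = []
    queue = deque([start_url])
    seen_nav = {start_url}
    seen_products = set()
    requests_made = 0

    def budget_left() -> bool:
        return max_requests == 0 or requests_made < max_requests

    while queue and budget_left():
        # Step 1: request navigation data for a listing page.
        nav = fetch_navigation(queue.popleft())
        requests_made += 1
        # Step 2: request product data for every linked product URL.
        for product_url in nav.items:
            if product_url in seen_products:
                continue
            if not budget_left():
                break
            seen_products.add(product_url)
            products.append(fetch_product(product_url))
            requests_made += 1
        # Step 3: repeat for the next page and any subcategories.
        follow = ([nav.next_page] if nav.next_page else []) + nav.subcategories
        for url in follow:
            if url not in seen_nav:
                seen_nav.add(url)
                queue.append(url)
    return products


# Hypothetical mock data: two listing pages with 20 and 12 placeholder books.
PAGE_1 = "https://books.toscrape.com/catalogue/category/books/mystery_3/index.html"
PAGE_2 = "https://books.toscrape.com/catalogue/category/books/mystery_3/page-2.html"
MOCK_NAV = {
    PAGE_1: Navigation(items=[f"book-{i}" for i in range(20)], next_page=PAGE_2),
    PAGE_2: Navigation(items=[f"book-{i}" for i in range(20, 32)]),
}

items = crawl(PAGE_1, MOCK_NAV.__getitem__, lambda url: {"url": url})
print(len(items))  # 32
```

With this mock data the loop makes 2 navigation requests and 32 product requests, 34 in total, which stays under the default limit of 100. Lowering max_requests stops the crawl early, while 0 removes the cap.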

Next steps

You are now familiar with the basics of AI spiders. You have used the E-commerce spider template and learned about its most important parameter: Inputs / URL.

In the next chapter, you will learn about the next most important parameter of most AI spiders.