Start a Scrapy project

To build your web scraping project, you will use Scrapy, a popular open source web scraping framework written in Python and maintained by Zyte.

Set up your project

Install Python, version

3.10or higher.Create a

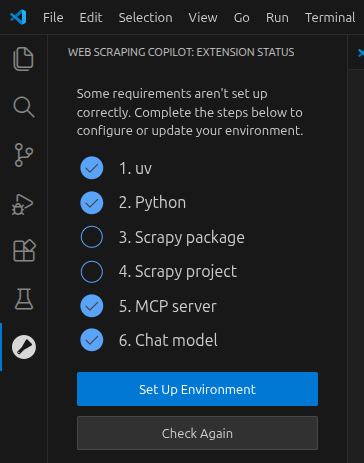

web-scraping-tutorialfolder and open it with Visual Studio Code.Select Web Scraping Copilot on the sidebar, and under Extension Status, complete the setup steps.

On the Python step, select Switch Interpreter › Create Virtual Environment › Venv and then select an installed Python executable.
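You can confirm that the interpreter you selected meets these requirements by running a small check from the Visual Studio Code terminal. The following is a minimal sketch that uses only the standard library. The function names are hypothetical and not part of Scrapy or Web Scraping Copilot. Detecting a virtual environment by comparing `sys.prefix` with `sys.base_prefix` is the approach described in the Python `venv` documentation.

```python
import sys

# Minimum Python version required by this tutorial.
MINIMUM_PYTHON = (3, 10)


def python_version_ok(minimum=MINIMUM_PYTHON):
    """Return True if the running interpreter is at least `minimum`."""
    return sys.version_info[:2] >= minimum


def in_virtualenv():
    """Return True if running inside a virtual environment.

    Inside a venv, sys.prefix points to the environment while
    sys.base_prefix still points to the base Python installation.
    """
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print(f"Python {sys.version.split()[0]}")
    print("Version OK:", python_version_ok())
    print("Inside a virtual environment:", in_virtualenv())
```

Run it with the interpreter from `.venv`. If setup succeeded, both checks should print `True`.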

Your `web-scraping-tutorial` folder should now contain the following folders and files:

```
web-scraping-tutorial/
├── .venv/
│   └── …
├── project/
│   ├── spiders/
│   │   └── __init__.py
│   ├── __init__.py
│   ├── items.py
│   ├── middlewares.py
│   ├── pipelines.py
│   └── settings.py
└── scrapy.cfg
```

Create your first spider

Now that you are all set up, you will write code to extract data from all books in the Mystery category of books.toscrape.com.

Create a file at `project/spiders/books_toscrape_com.py` with the following code:

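```python
from scrapy import Spider


class BooksToScrapeComSpider(Spider):
    name = "books_toscrape_com"
    custom_settings = {
        # Crawl faster than usual; acceptable here only because
        # toscrape.com is a test site.
        "CONCURRENT_REQUESTS_PER_DOMAIN": 8,
        "DOWNLOAD_DELAY": 0.01,
    }
    start_urls = [
        "http://books.toscrape.com/catalogue/category/books/mystery_3/index.html"
    ]

    def parse(self, response):
        # Follow the "next" pagination link. No callback is given, so the
        # response is parsed by this same parse method.
        next_page_links = response.css(".next a")
        yield from response.follow_all(next_page_links)
        # Follow every book link; those responses are parsed by parse_book.
        book_links = response.css("article a")
        yield from response.follow_all(book_links, callback=self.parse_book)

    def parse_book(self, response):
        # Yield one record per book detail page.
        yield {
            "name": response.css("h1::text").get(),
            # Capture the text after the pound sign, without the currency symbol.
            "price": response.css(".price_color::text").re_first("£(.*)"),
            "url": response.url,
        }
```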

In the code above:

- You define a Scrapy spider class, `BooksToScrapeComSpider`, whose spider name is `books_toscrape_com`.
- You set custom values for `CONCURRENT_REQUESTS_PER_DOMAIN` and `DOWNLOAD_DELAY` to speed up crawls during the tutorial. https://toscrape.com is a test site, so it is safe to do so.
- Your spider starts by sending a request for the Mystery category URL, http://books.toscrape.com/catalogue/category/books/mystery_3/index.html (`start_urls`), and parses the response with the default callback method, `parse`.
- The `parse` callback method:
  - Finds the link to the next page and, if found, yields a request for it. The response to that request is also parsed by the `parse` callback method. As a result, the `parse` callback method eventually parses all pages of the Mystery category.
  - Finds links to book detail pages and yields requests for them. The responses to those requests are parsed by the `parse_book` callback method. As a result, the `parse_book` callback method eventually parses all book detail pages from the Mystery category.
- The `parse_book` callback method extracts a record of book information with the book name, price, and URL.
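The `price` field relies on the regular expression `£(.*)`. When a pattern has one capture group, `re_first` returns the text matched by that group rather than the whole match. The sketch below reproduces that behavior with the standard `re` module, so you can try the pattern without running a crawl. `extract_price` and `price_as_decimal` are hypothetical helpers and not part of Scrapy. The `Decimal` conversion is only an assumption about how you might post-process the value, since the spider itself keeps the price as a string.

```python
import re
from decimal import Decimal

# Same pattern as the spider: capture everything after the pound sign.
PRICE_PATTERN = re.compile(r"£(.*)")


def extract_price(text):
    """Return the first captured price string, or None if there is no match.

    With a single capture group, findall returns the group's text, which
    mirrors what re_first returns in the spider.
    """
    matches = PRICE_PATTERN.findall(text)
    return matches[0] if matches else None


def price_as_decimal(text):
    """Optional post-processing: convert the captured string to Decimal."""
    price = extract_price(text)
    return Decimal(price) if price is not None else None
```

For example, `extract_price("£51.77")` returns the string `"51.77"`, and text without a pound sign returns `None`.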

Tip

What if, instead of writing spider code manually, you could use AI to write parsing code for you? See the AI-assisted web scraping tutorial.

Now run your spider:

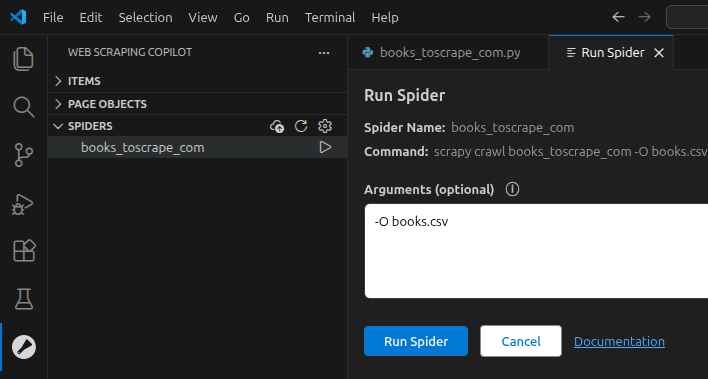

1. Select Web Scraping Copilot on the sidebar.
2. Expand the Spiders view. Click the Refresh button if your spider is not listed.
3. Click the Run Spider Locally button for your spider.
4. Paste `-O books.csv` in the Arguments field.
5. Click Run Spider.
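The `-O books.csv` argument follows the syntax of Scrapy's `crawl` command. `-O` writes the scraped items to a file and overwrites that file if it already exists, while lowercase `-o` appends instead. Assuming the Arguments field is passed to the crawl command unchanged, the `FEEDS` setting is a roughly equivalent configuration. You could place it in `project/settings.py` so that the output file is written without any arguments. This is a sketch, and the `overwrite` feed option requires Scrapy 2.4 or later.

```python
# Roughly equivalent to passing "-O books.csv": export all scraped items
# to books.csv in CSV format, replacing the file on every run.
FEEDS = {
    "books.csv": {
        "format": "csv",
        "overwrite": True,
    },
}
```

To apply it to this spider only, add the same dictionary to `custom_settings` under the `"FEEDS"` key.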

Once execution finishes, the generated `books.csv` file will contain records for all books from the Mystery category of books.toscrape.com in CSV format. You can open `books.csv` with any spreadsheet app.
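If you would rather inspect the results in Python than in a spreadsheet app, the standard `csv` module can read the file back. This minimal sketch assumes the header row uses the item keys from `parse_book` (`name`, `price`, `url`) and converts the price column to `Decimal`. `load_books` is a hypothetical helper.

```python
import csv
from decimal import Decimal


def load_books(path="books.csv"):
    """Read the exported CSV into a list of dicts with Decimal prices.

    Assumes a header row with the name, price, and url columns that
    parse_book yields.
    """
    books = []
    # newline="" is what the csv module documentation recommends for files.
    with open(path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            # An empty cell means the price could not be extracted.
            row["price"] = Decimal(row["price"]) if row["price"] else None
            books.append(row)
    return books
```

For example, running `len(load_books())` from the project folder after the crawl returns the number of books written to `books.csv`.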

Continue to the next chapter to learn how to deploy and run your web scraping project in the cloud.