Generate parsing code with AI

Now that your project is ready, you will use AI to generate code to parse book webpages from https://books.toscrape.com.

1. Generate an item class

First, you need to define the type of data that you want to parse from each book page.

Tip

Select a reasonably capable model in the chat view, e.g. GPT-5 or similar. GPT-5 mini is OK if you prefer a non-premium model. GPT-4.1 is problematic for web scraping.

Ask the AI to:

Define a dataclass item called Book with title, price and url fields. Make them optional and of type str | None.

The AI should edit project/items.py to add:

project/items.py

    from dataclasses import dataclass


    @dataclass
    class Book:
        url: str | None = None
        title: str | None = None
        price: str | None = None

Tip

You can use any item type supported by Scrapy; dataclass is one of many options. You can also use a pre-made item type from zyte-common-items, like zyte_common_items.Product, instead of writing your own item type from scratch.

2. Generate parsing code

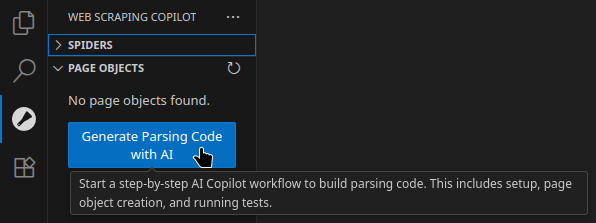

Select Web Scraping Copilot › Page Objects › Generate Parsing Code with AI:

The chat view will open in Web scraping mode, a prompt will be sent, and the AI will start assisting. It should:

1. Ask you for some input.

   It usually detects the right item type to use and the right path to save your page objects (more on them later), but it always needs you to specify example target URLs.

   You are generating a page object for book detail pages, so choose a few such URLs and share them in chat.

2. Help you set up scrapy-poet.

   Note

   You will only need to do this once per Scrapy project.

3. Create project/pages/books_toscrape_com.py with something like:

       from project.items import Book
       from web_poet import Returns, WebPage, field, handle_urls


       @handle_urls("books.toscrape.com")
       class BooksToscrapeComBookPage(WebPage, Returns[Book]):
           pass

   Note

   This is a page object class. It defines how to extract a given type of data (e.g. Book) from a given URL pattern (e.g. the books.toscrape.com domain).

4. Generate test fixtures for the target example URLs.

   Note

   web-poet test fixtures are example inputs and expected outputs for a page object class. You can use them to test your code, and the AI can use them to generate the right parsing code.

5. Populate fixture expectations.

   Note

   The output.json file in test fixture folders is meant to contain the data that should be extracted from the URL of the test fixture.

6. Generate parsing code for your new page object class.

7. Run automated tests to check that the generated parsing code extracts the expected data.

By the end, you should have a working page object class that can extract book data from any book URL from https://books.toscrape.com.

3. Create a spider

Now that you have a working page object, it is time to implement a Scrapy spider that can use it.

Create a file at project/spiders/books.py with the following code:

    from scrapy import Request, Spider

    from project.items import Book


    class BookSpider(Spider):
        name = "book"
        # Set from the command line with -a url=<url>.
        url: str

        async def start(self):
            yield Request(self.url, callback=self.parse_book)

        # scrapy-poet injects `book` based on the Book type hint.
        async def parse_book(self, _, book: Book):
            yield book

The spider expects a url argument, which you can pass to a spider with the -a url=<url> syntax.

When a request targets the parse_book callback, scrapy-poet sees the Book type hint and injects a book parameter built with your page object class.

Your spider can now extract book data from any book details page from https://books.toscrape.com. For example, try running your book spider with the following Arguments:

    -a url=https://books.toscrape.com/catalogue/soumission_998/index.html
    -o books.jsonl

It will generate a books.jsonl file with the following JSON object:

    {
        "url": "https://books.toscrape.com/catalogue/soumission_998/index.html",
        "title": "Soumission",
        "price": "50.10"
    }

You can also repeat step 2 for other book stores, and this same spider will work for them; there is no need for a separate spider per website.

Continue to the next chapter to use AI to generate crawling code, so that you can write a spider that crawls an entire book store rather than a single book URL.